AI in Clinical Radiology: Addressing Core Medical Imaging Diagnosis Challenges

January 27, 2026 - Purva Shah

Clinical radiology data is becoming increasingly difficult to interpret as datasets and their sources grow more complex. Rising scan volumes, staff shortages, and the need to analyze complicated data from multiple imaging modalities add to the burden on radiology teams. Artificial intelligence (AI) can help address this by enhancing medical image quality, detecting abnormalities, and combining information from medical scans, electronic health records (EHRs), and biological signals. Deep learning (DL) methods can leverage radiomics to extract patterns from medical images (CT, MRI, PET) that human readers cannot detect, and they also support anomaly detection, predictive modeling, and image-quality enhancement.

AI models can automate preprocessing and feature extraction and generate structured reports that present results with detailed analysis. These intelligent systems give radiologists, technologists, and administrators preventive tools that support early diagnosis and individualized patient care while reducing variability in treatment between healthcare facilities.

This article (part one of a two-part series) provides an overview of the traditional radiology workflow, its limitations, and the potential for AI to augment efficient imaging pipelines.

The healthcare industry now produces large amounts of data, which researchers predict will account for a major portion of total global data within the next 10 years [1]. Medical imaging data from MRI, CT, and PET scans is now commonly integrated with genomics and proteomics information. (Genomics studies an individual's genetic information, whereas proteomics investigates the proteins that result from gene expression.) Wearable sensor data and large amounts of unstructured EHR information add to these sources, producing multimodal datasets.

While the radiology department continues to generate large amounts of information, it still follows a traditional, modality-driven workflow. Because this conventional workflow serves as the basis for modern imaging-based clinical operations, it is the natural starting point for improving how data is generated and processed.

Conventional Radiology Workflow

The traditional radiology workflow starts when patients are scanned using modalities such as CT, MRI, PET, and ultrasound. These systems rely on specialized hardware that detects biological signals from the human body. The hardware cleans and amplifies the raw signals before digital conversion, producing a digital output with as little noise as possible.

Once the system captures the scan data, it is converted into images in the Digital Imaging and Communications in Medicine (DICOM) format. DICOM is the standard format in radiology, enabling medical staff to access and distribute both images and the associated patient data in a consistent way.

After the images are generated, they are sent to the picture archiving and communication system (PACS). As the central storage and retrieval hub, PACS enables radiologists and clinicians to access, compare, and archive the imaging studies they need for patient care. PACS displays the digital data through specialized processing units, which scale up the images so radiologists can interpret them manually. Experts view the display screens to identify lesions and areas of interest, relying on their medical knowledge and visual assessment skills to define problem areas.

Reports produced through this kind of individual analysis are often semi-structured or narrative and presented as text. With no automated, standardized set of checks, interpretation is left in the hands of individual radiologists. Diagnoses depend entirely on each individual's interpretation, professional expertise, and mental processing abilities.

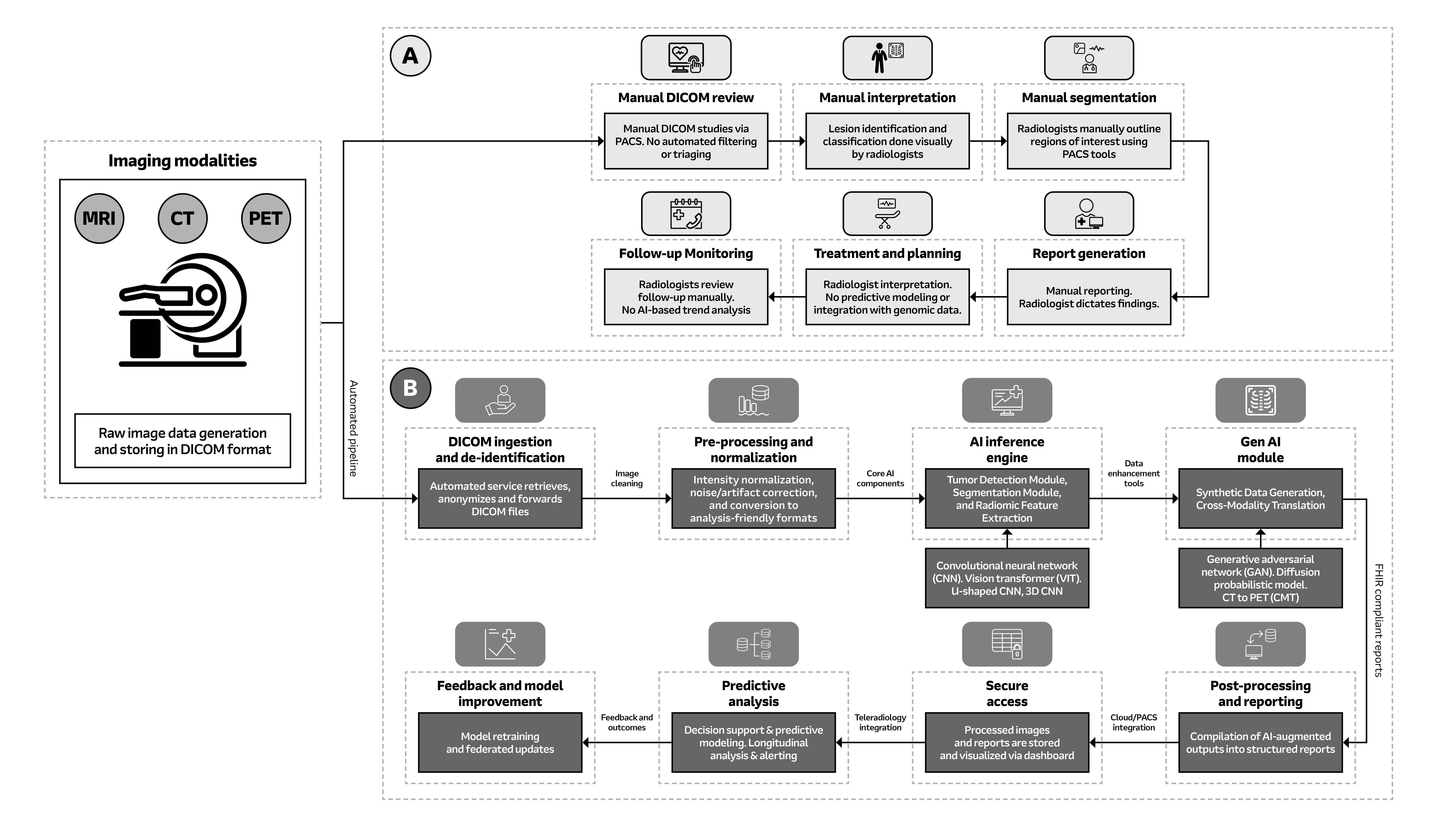

Fig. 1: System block diagram of oncology imaging: Figure A illustrates the conventional method of analysis while Figure B depicts AI-powered image analysis. 2025 Arrow Electronics, Inc., used with permission.

Embracing a Data-Driven Diagnostic Future

Rising radiology scan volumes, limited staffing, and pressure to deliver fast and accurate reads make it difficult to manually interpret large, heterogeneous imaging datasets with consistency. Clinicians have historically relied on their highly attuned personal skills, manually integrating various data points to derive insights through hands-on observation and examination. However, human data interpretation is limited by cognitive processing capacity and visual acuity when identifying subtle, emerging anomalies [2].

AI and machine learning (ML) technologies can identify concealed patterns and abnormal data points in complex, high-dimensional data that human vision fails to detect. Integrated diagnostic intelligence systems are designed to combine multiple clinical data sources using AI-based analytics. This enables better management of workflow operations, which improves operational precision and consistency.

AI and ML can enhance data utilization, supporting clinicians in analyzing and leveraging all resources at their disposal for patient care. Data-driven smart tools can offer clinical utility for managing progressive diseases within the field of radiology. For example, to help address coronary artery disease, smart systems could automatically measure coronary artery calcium (CAC) on incidental chest CT scans (i.e., scans done for other reasons, such as lung cancer screening). This would allow for earlier intervention by identifying high-risk patients who require referral to cardiology for lifestyle modifications or medications, enabling advanced decision making for preventative care, as well as allowing for risk prediction of future cardiac events like heart attack.
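As a rough illustration of what automated CAC measurement involves, the sketch below scores a single axial slice using Agatston-style scoring. The article does not name a scoring method, so the choice of Agatston scoring, the 1 mm² minimum lesion area, and all function names are assumptions made for this example. It also assumes the coronary region has already been isolated (in practice, by a segmentation model) and that the slice is given in Hounsfield units.

```python
from collections import deque
import numpy as np

HU_THRESHOLD = 130    # conventional calcium threshold in Hounsfield units
MIN_AREA_MM2 = 1.0    # assumed cutoff for ignoring tiny specks

def density_weight(peak_hu):
    """Agatston density factor from a lesion's peak attenuation."""
    if peak_hu >= 400:
        return 4
    if peak_hu >= 300:
        return 3
    if peak_hu >= 200:
        return 2
    return 1

def agatston_slice_score(slice_hu, pixel_area_mm2):
    """Score one slice: sum over lesions of area (mm^2) x density weight."""
    mask = slice_hu >= HU_THRESHOLD
    seen = np.zeros_like(mask, dtype=bool)
    rows, cols = mask.shape
    total = 0.0
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        # Breadth-first flood fill collects one 4-connected lesion.
        queue, pixels = deque([(r0, c0)]), []
        seen[r0, c0] = True
        while queue:
            r, c = queue.popleft()
            pixels.append(slice_hu[r, c])
            for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if (0 <= nr < rows and 0 <= nc < cols
                        and mask[nr, nc] and not seen[nr, nc]):
                    seen[nr, nc] = True
                    queue.append((nr, nc))
        area = len(pixels) * pixel_area_mm2
        if area >= MIN_AREA_MM2:
            total += area * density_weight(max(pixels))
    return total

# Toy 6x6 slice: soft tissue (~40 HU) with two calcified lesions.
slice_hu = np.full((6, 6), 40.0)
slice_hu[1:3, 1:3] = 250.0    # 4 pixels, peak 250 HU, weight 2
slice_hu[4, 4:6] = 450.0      # 2 pixels, peak 450 HU, weight 4
score = agatston_slice_score(slice_hu, pixel_area_mm2=0.5)
print(score)                  # 2.0 mm^2 * 2 + 1.0 mm^2 * 4 = 8.0
```

A full score would sum this value across all slices. Scores from incidental, non-gated chest CT are only an approximation of scores from dedicated cardiac protocols, so any risk thresholds would need clinical validation.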

Conventional radiology setups would benefit from several other factors that could be used as an enhancement blueprint for transitioning to AI-driven workflows:

- Real-time Predictive Modeling: Linking to historical and genomic data to inform clinicians about potential disease progression based on AI-augmented inferences.

- Automated Triage or Filtering: Adding a layer that flags complex or urgent studies for priority review, which could increase time per study but would ultimately provide deeper perspectives.

- Quantitative Feature Extraction: Moving analysis from a more subjective base to a more objective foundation via high-dimensional radiomic biomarkers that AI can now provide [3].
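The triage idea in the list above can be sketched as a priority queue over the reading worklist. This is a minimal illustration, assuming an upstream model has already assigned each study an urgency score between 0 and 1. The `Study` class, the function names, and the accession numbers are hypothetical.

```python
import heapq
from dataclasses import dataclass, field

@dataclass(order=True)
class Study:
    priority: float                        # lower values are popped first
    accession: str = field(compare=False)  # identifier, ignored when ordering

def build_worklist(scored):
    """scored: iterable of (accession, urgency), with urgency in [0, 1]."""
    # Negate urgency so the most urgent study has the smallest priority.
    heap = [Study(priority=-urgency, accession=acc) for acc, urgency in scored]
    heapq.heapify(heap)
    return heap

def next_study(heap):
    """Pop the accession number of the most urgent remaining study."""
    return heapq.heappop(heap).accession

worklist = build_worklist([("A1", 0.20), ("A2", 0.95), ("A3", 0.60)])
reading_order = [next_study(worklist) for _ in range(3)]
print(reading_order)   # ['A2', 'A3', 'A1']
```

A heap keeps insertion and retrieval cheap as new studies arrive, so the worklist can be re-prioritized continuously rather than sorted in batches.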

High-Sensitivity Medical Image Analysis

Detecting anomalies in the initial stages of disease through radiology imaging alone is likely one of the most challenging tasks for clinicians in the current diagnostic environment. High-sensitivity image analysis is concerned with recognizing extremely subtle, often low-contrast features, since early indicators of disease can't always be seen or classified by the naked eye. AI analysis can make the identification of neurodegenerative disorders, cardiac diseases, and cancer more efficient. However, a few technical, computational, and clinical obstacles need to be addressed [4]:

Low Signal-to-Noise Ratio

Early-stage anomalies appear on radiology images as dim or dark patterns. To enhance signal clarity, low-noise amplifiers and circuits with high common-mode rejection ratios are integrated into the hardware, which can block electrical interference and boost signal integrity in the readouts. Low signal-to-noise ratio (SNR) still limits accuracy, but multi-modal fusion can enhance early disease detection by combining complementary signals, and AI-driven denoising and feature extraction can improve the effective SNR, enabling more reliable anomaly identification [5].

Regardless of hardware solutions, physical and environmental processes make noise artifacts and protocol deviations an inseparable part of data acquisition. This is where AI models offer an additional layer of computational processing. Better detection of early tumor margins or inflammation biomarkers against normal tissue will increasingly rely on computational pre-processing methods and noise-tolerant neural networks.
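To make the SNR discussion concrete, the sketch below measures SNR on a synthetic image before and after a simple denoising step. It is only an illustration under stated assumptions: SNR is taken as mean signal intensity divided by the standard deviation of background noise (one common definition), and a classical 3x3 mean filter stands in for the learned, AI-driven denoisers the article refers to. The image and masks are synthetic.

```python
import numpy as np

def snr(image, signal_mask, background_mask):
    """Mean signal intensity divided by the std of background noise."""
    return image[signal_mask].mean() / image[background_mask].std()

def mean_filter_3x3(image):
    """Average each pixel with its 8 neighbors (edges padded by reflection)."""
    padded = np.pad(image, 1, mode="reflect")
    out = np.zeros_like(image, dtype=float)
    for dr in range(3):
        for dc in range(3):
            out += padded[dr:dr + image.shape[0], dc:dc + image.shape[1]]
    return out / 9.0

rng = np.random.default_rng(0)
clean = np.zeros((64, 64))
clean[24:40, 24:40] = 1.0                          # faint square "lesion"
noisy = clean + rng.normal(0.0, 0.5, clean.shape)  # additive Gaussian noise

signal_mask = clean > 0
background_mask = np.zeros_like(signal_mask)
background_mask[:16, :16] = True                   # corner far from the lesion

snr_before = snr(noisy, signal_mask, background_mask)
snr_after = snr(mean_filter_3x3(noisy), signal_mask, background_mask)
print(f"SNR before: {snr_before:.2f}, after: {snr_after:.2f}")
```

Averaging suppresses noise but also blurs lesion edges, which is the trade-off that noise-tolerant networks and learned denoisers aim to handle better than fixed filters.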

Inter-Patient Anatomical Variability

The human body structure has evolved over time into a wide range of anatomical shapes and diversity due to various genetic, environmental, and lifestyle factors. These evolutionary features of anatomical characteristics present a challenging problem for current AI models, given existing datasets do not always offer a wide variety of anatomical structures.

Furthermore, variation in organ sizes, shapes, and placement can easily mask subtle indicators, increasing the likelihood of false positives and false negatives in diagnosing diseases. For AI models to continually improve, they must be trained on an expanded dataset that reflects the full spectrum of anatomical variability, encompassing variations in body habitus alongside relevant evolutionary perspectives [6].

Morphological and Textural Deviations

In the early stages of a disease, morphological and textural changes are often minimal and can easily go undetected. Images may show only small pixel-to-pixel changes in brightness, thin slivers, slightly uneven tissue edges, or other minor tissue changes. Detecting these differences requires not only high-resolution images but also high-dimensional feature extraction techniques such as texture-based radiomics, automated segmentation, and multiscale learning frameworks. Examples include 3D convolutional neural networks (3D CNNs) and variants of the U-Net architecture.
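As one example of a texture-based radiomic feature, the sketch below computes contrast from a gray-level co-occurrence matrix (GLCM). The GLCM is a commonly used texture descriptor, but the article does not name it, so its use here is an assumption. The function names and toy images are illustrative, and the input is assumed to be already quantized to a small number of integer gray levels.

```python
import numpy as np

def glcm(image, levels, dr=0, dc=1):
    """Normalized, symmetric co-occurrence matrix for a non-negative offset."""
    rows, cols = image.shape
    a = image[:rows - dr, :cols - dc]    # reference pixels
    b = image[dr:, dc:]                  # neighbors at offset (dr, dc)
    counts = np.zeros((levels, levels))
    np.add.at(counts, (a.ravel(), b.ravel()), 1)   # tally gray-level pairs
    counts += counts.T                   # count each pair in both directions
    return counts / counts.sum()

def contrast(p):
    """GLCM contrast: large when neighboring pixels differ strongly."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))

smooth = np.zeros((4, 4), dtype=int)                # uniform tissue
checker = 3 * (np.indices((4, 4)).sum(axis=0) % 2)  # alternating 0 and 3

contrast_smooth = contrast(glcm(smooth, levels=4))
contrast_checker = contrast(glcm(checker, levels=4))
print(contrast_smooth, contrast_checker)            # 0.0 9.0
```

The uniform patch scores zero, while the checkerboard, where every horizontal neighbor differs by three gray levels, scores nine. Features like this turn a visual impression of "roughness" into a number that can be compared across studies.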

High-Dimensional Data Complexity

3D MRI, PET-CT/MRI, and 4D CT scans produce extremely large and complex multidimensional datasets. From an operational perspective, processing and analyzing such datasets for medical decision-making is expensive, which makes it a valid opportunity for AI solutions. But the complexity of the data also makes it difficult to train AI models that are both practical and effective.

Models trained without proper balance may perform accurately in certain scenarios but struggle when presented with new, unseen clinical cases. Training AI models effectively requires large, balanced datasets as well as dimensionality-reduction techniques that keep clinical relevance intact while minimizing loss of information [7].
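A minimal sketch of dimensionality reduction is shown below, using principal component analysis (PCA) computed via singular value decomposition. PCA is chosen here only as a familiar example; the article does not prescribe a specific technique. The feature matrix is synthetic, and the 95% variance target is an assumed setting.

```python
import numpy as np

def pca_reduce(features, variance_to_keep=0.95):
    """Project features onto the fewest components reaching the variance target."""
    centered = features - features.mean(axis=0)
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)          # variance ratio per component
    k = int(np.searchsorted(np.cumsum(explained), variance_to_keep)) + 1
    k = min(k, len(s))
    return centered @ vt[:k].T, explained[:k]

# Synthetic example: 200 cases, 50 correlated features driven by 3 latent factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 3))
mixing = rng.normal(size=(3, 50))
features = latent @ mixing + 0.01 * rng.normal(size=(200, 50))

reduced, explained = pca_reduce(features)
print(reduced.shape, round(float(explained.sum()), 4))
```

Because the 50 features are driven by three underlying factors, at most three components are needed to retain the target variance. Whether the retained components preserve clinical relevance is a separate question that has to be checked against outcomes, not variance alone.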

Multi-Modality Data Fusion Challenges

Clinical decision-making also often depends on combining separate sources of information, whether from different imaging modalities (for example, fusing structural MRI with metabolic PET data) or from different data modalities (such as fusing imaging data with genomic data, lab results, or electronic health records). The fusion of datasets is a practical challenge, given the differences in spatial resolution, timing of the data acquisition, and structural features. Advanced fusion strategies, such as attention-based networks and cross-modal transformers [8], are essential for producing reliable results without loss of interpretability or information quality.
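The core operation behind attention-based fusion can be sketched as scaled dot-product cross-attention, where features from one modality query features from another. The example below is a simplified illustration in NumPy with random, untrained projection weights. The token counts, feature sizes, and variable names are assumptions made for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(query_feats, context_feats, w_q, w_k, w_v):
    """Each query token gathers a weighted mix of context tokens."""
    q = query_feats @ w_q
    k = context_feats @ w_k
    v = context_feats @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])   # scaled dot-product similarity
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
mri_tokens = rng.normal(size=(6, 16))   # 6 MRI patches, 16 features each
pet_tokens = rng.normal(size=(4, 8))    # 4 PET patches, 8 features each
w_q = rng.normal(size=(16, 12))         # untrained projections into a
w_k = rng.normal(size=(8, 12))          # shared 12-dimensional space
w_v = rng.normal(size=(8, 12))

fused, weights = cross_attention(mri_tokens, pet_tokens, w_q, w_k, w_v)
print(fused.shape, weights.shape)       # (6, 12) (6, 4)
```

The two modalities have different token counts and feature sizes, mirroring the resolution mismatch described above. The attention weights also show which PET regions informed each MRI region, which is one reason attention is often discussed alongside interpretability.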

Labeled Training Data

Annotation is a time-consuming, labor-intensive, and costly task when clinical staff must manually tag and label volumes of images and substructures in a hierarchical or multi-level manner across a range of categories. This makes the whole process resource-hungry and prone to human error. A lack of high-quality training data with clean annotations could in turn bottleneck the clinical scalability of AI.

Training datasets can be augmented by leveraging techniques such as semi-supervised learning, transfer learning from related domains, self-supervision, and generative models like generative adversarial networks (GANs) [9]. In radiology, GANs can produce realistic synthetic medical images that resemble actual patient scans. These synthetic images help address limited data availability, for example by supplementing the scarce examples of uncommon medical conditions, which makes models more robust. GANs can also support domain adaptation, harmonizing images obtained through different scanning systems and imaging techniques, and they can be trained without complete human labeling. Combined, these methods can help healthcare organizations speed up AI development by reducing annotation requirements and improving their ability to handle different data types.
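Of the techniques listed above, semi-supervised learning is the simplest to sketch. The example below shows one round of pseudo-labeling: a model fitted on a few labeled cases labels the unlabeled cases it is confident about, and those are added to the training set. The nearest-centroid classifier, the confidence margin, and the two-dimensional synthetic features are all assumptions chosen to keep the example short.

```python
import numpy as np

def centroids(x, y):
    """Mean feature vector for each of the two classes (0 and 1)."""
    return np.stack([x[y == c].mean(axis=0) for c in (0, 1)])

def predict_with_margin(x, cents):
    """Nearest-centroid label plus a confidence margin between distances."""
    d = np.linalg.norm(x[:, None, :] - cents[None, :, :], axis=2)
    return d.argmin(axis=1), np.abs(d[:, 0] - d[:, 1])

def self_train(x_lab, y_lab, x_unlab, margin=1.0):
    """One pseudo-labeling round: keep only confidently labeled cases."""
    cents = centroids(x_lab, y_lab)
    pseudo, conf = predict_with_margin(x_unlab, cents)
    keep = conf >= margin
    x_all = np.concatenate([x_lab, x_unlab[keep]])
    y_all = np.concatenate([y_lab, pseudo[keep]])
    return centroids(x_all, y_all), int(keep.sum())

rng = np.random.default_rng(0)
x_lab = np.array([[-3.0, -3.0], [-2.5, -3.5], [3.0, 3.0], [3.5, 2.5]])
y_lab = np.array([0, 0, 1, 1])                 # only four annotated cases
x_unlab = np.concatenate([
    rng.normal(-3.0, 1.0, size=(100, 2)),      # unlabeled, class 0 region
    rng.normal(3.0, 1.0, size=(100, 2)),       # unlabeled, class 1 region
])

new_centroids, n_added = self_train(x_lab, y_lab, x_unlab)
print(n_added, new_centroids.round(2))
```

The margin threshold matters: set too low, wrong pseudo-labels reinforce the model's own mistakes, which is why confident-only selection is a common safeguard.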

Generalizability and Deployment Bias

Additional dataset bias can come from variability in scanner hardware and software (manufacturers, imaging protocols) along with patient demographics. AI models trained on one hardware configuration, or with narrow acquisition protocols for a specific clinical setting, can generalize poorly and fail to gain adoption across wider customer bases and use cases. A fundamental selling point as AI models develop will be the ability to deploy solutions across clinical settings seamlessly, without adding workflows or significantly changing infrastructure. Models with explainability features and clear methodologies (attention or saliency maps, heat maps, etc.) will be essential for seamless integration [10].

Radiology workflows follow standardized, time-sensitive procedures, which makes it hard to adopt AI solutions that require major system changes or new operational procedures. Successful AI implementation in radiology will depend largely on creating a system that works across imaging modalities (CT, MRI, X-ray) and multiple vendor systems without disrupting current workflows.

Radiologists need explainability features, including attention maps, saliency maps, and heat maps, to verify the accuracy of AI-generated results. These tools help doctors understand why a model flagged a lesion or anomaly, supporting both diagnostic confidence and transparency.
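One simple way to produce such a heat map is occlusion sensitivity: hide one image patch at a time and record how much the model's score drops. The sketch below illustrates the idea. The `toy_score` function is a hypothetical stand-in for a trained model, and the patch size and fill value are assumed settings.

```python
import numpy as np

def occlusion_map(image, score_fn, patch=4, fill=0.0):
    """Heat map of score drops when each patch is hidden in turn."""
    base = score_fn(image)
    heat = np.zeros_like(image, dtype=float)
    for r in range(0, image.shape[0], patch):
        for c in range(0, image.shape[1], patch):
            occluded = image.copy()
            occluded[r:r + patch, c:c + patch] = fill
            # A large drop means the model relied on this region.
            heat[r:r + patch, c:c + patch] = base - score_fn(occluded)
    return heat

def toy_score(image):
    """Hypothetical model: responds only to brightness in one region."""
    return float(image[8:12, 8:12].mean())

image = np.ones((16, 16))
heat = occlusion_map(image, toy_score)
hot_rows, hot_cols = np.nonzero(heat)
print(hot_rows.min(), hot_rows.max(), hot_cols.min(), hot_cols.max())  # 8 11 8 11
```

The heat map is nonzero only over the region the toy model depends on. The method treats the model as a black box, so it works without access to model internals, at the cost of one extra inference per patch.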

Transitioning to an AI-driven Radiology Workflow

While the challenges facing AI solutions have yet to be fully addressed, AI is poised to change medical imaging practice over time, marking a paradigm shift from conventional step-by-step approaches and manual interpretation to a data-driven pipeline.

AI-driven Radiology Workflows

AI-driven radiology workflows can automate image analyses that are manual and time-intensive, prioritize which studies are shortlisted for review, and help clinicians focus their time and expertise on the actual review stage. AI modules can also be designed to be context-adaptive and to work across modalities, while still sending clinical signals and data through the same scan-acquisition, review, and report stages that are foundational to any medical workflow.

- Automated Ingestion and Pre-Processing: Once diagnostic data is acquired in any of the formats discussed above, it is automatically ingested into the system, de-identified (for patient privacy), and subjected to thorough pre-processing and normalization. This standardizes the input for the subsequent AI inference, bringing a high degree of consistency across different modalities and acquisition conditions.

- Core AI Inference: State-of-the-art CNNs, vision transformers (ViTs), and other DL network architectures are at the front line of tumor detection and segmentation at the pixel level. These models are trained to be robust to low-SNR scenarios and small variations in contrast, and they can learn to spot features that are imperceptible to human eyes [11].

- Radiomic Feature Extraction: Radiomic analysis now goes beyond visual interpretation to extract high-dimensional, quantitative biomarkers in an automated fashion. These objective metrics provide insights into tumor heterogeneity, shape, and growth that are hard to comprehend during manual review, adding a valuable layer of quantitative analysis to the diagnostic workflow [12].

- Generative and Predictive Modules: Generative AI components in the system, including models for cross-modality synthesis, can add important functionality by synthesizing data or inferring properties, such as synthesizing a metabolic-like map from a structural MRI scan. Predictive analysis can also provide real-time alerts and longitudinal analysis, extending static imaging review toward dynamic and predictive monitoring [13].

- Structured Reporting and Integration: AI-driven results are standardized in a well-structured, interoperable report format (such as one based on the FHIR standard) and then integrated with the PACS and radiology information system (RIS) for clinicians to access in real time.
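The first and last stages in the list above can be sketched end to end. The example below is a minimal illustration, not a compliant implementation: the list of identifying fields is a small illustrative subset of DICOM attribute names, z-score scaling is one assumed normalization choice, and the report is a FHIR-style `DiagnosticReport` dictionary that has not been validated against the FHIR specification. All function names are hypothetical.

```python
import json
import numpy as np

# Illustrative subset only; real de-identification covers many more fields.
PHI_FIELDS = {"PatientName", "PatientID", "PatientBirthDate"}

def deidentify(metadata):
    """Drop directly identifying fields from a metadata dictionary."""
    return {k: v for k, v in metadata.items() if k not in PHI_FIELDS}

def normalize(volume):
    """Z-score normalization so inputs share a common intensity scale."""
    std = volume.std()
    return (volume - volume.mean()) / (std if std > 0 else 1.0)

def build_report(finding_text, status="preliminary"):
    """Minimal FHIR-style DiagnosticReport (assumed shape, not validated)."""
    return {
        "resourceType": "DiagnosticReport",
        "status": status,   # AI output stays preliminary until reviewed
        "code": {"text": "AI-assisted imaging analysis"},
        "conclusion": finding_text,
    }

metadata = {
    "PatientName": "DOE^JANE",
    "PatientID": "12345",
    "Modality": "CT",
    "StudyDescription": "CHEST W/O CONTRAST",
}
clean_meta = deidentify(metadata)

rng = np.random.default_rng(0)
volume = rng.normal(loc=40.0, scale=15.0, size=(8, 32, 32))  # synthetic scan
normalized = normalize(volume)

report = build_report("No suspicious nodules flagged by the model.")
report_json = json.dumps(report, indent=2)
print(clean_meta)
print(report_json)
```

Marking the report as preliminary reflects the article's framing of AI as an aid to the clinician: the output is a draft for review, not a final read.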

Conclusion

AI solutions and workflows for medical imaging are not about replacing clinicians, but rather augmenting their time and expertise to extend beyond the limitations of human observation and analysis. AI solutions can provide objectivity with quantitative feature extraction capability, interoperability, standardized reporting, predictive modeling, and better scalability.

AI-driven imaging can further identify, catalogue, and systematize low-contrast features that human perception does not always detect. Features extracted in high fidelity with an added data layer will increase clinician confidence in diagnosing diseases. Findings and data can be used to develop more integrated imaging platforms for disease staging. The process of data integration between images and non-image data needs standardization to achieve platform and system interoperability.

This transition is not about simply adopting new technology. It’s about reimagining the whole diagnostic process and unlocking the future of more predictive, personalized, and precise patient care.

In part two of this article series, we will discuss the technical considerations and system architecture required for a modern AI-driven radiology workflow and how these elements are reshaping radiology practice.

References

1. Rieke N, Hancox J, Li W, et al. The future of digital health with federated learning. NPJ Digital Medicine. 2020;3:119. doi:10.1038/s41746-020-00323-1. Accessed July 29, 2024.

2. Zhang T, Fan S, Zhao J, et al. Challenges in AI-driven biomedical multimodal data fusion and analysis. Genomics, Proteomics & Bioinformatics. 2025;23(3):100742. doi:10.1016/j.gpb.2025.100742. Accessed July 29, 2024.

3. Gillies RJ, Kinahan PE, Hricak H. Radiomics: Images Are More than Pictures, They Are Data. Radiology. 2016;278(2):563-577. doi:10.1148/radiol.2015151169.

4. Kumar S. Early Disease Detection Using AI: A Deep Learning Approach to Predicting Cancer and Neurological Disorders. International Journal of Scientific Research and Management. 2025;13(04):2136-2155. doi:10.18535/ijsrm/v13i04.mp02. Accessed December 11, 2025.

5. Zhao H, Nie D, Zhang H, et al. Multimodal brain image fusion based on improved rolling guidance filter and Wiener filter. Computational and Mathematical Methods in Medicine. 2022;2022:7545890. doi:10.1155/2022/7545890. Accessed July 29, 2024.

6. Zech JR, Herbort A, O'Malley AJ. Bias and fairness in AI for medical imaging. Nature Medicine. 2022;28:2561–2565. doi:10.1038/s41591-022-02086-9. Accessed July 29, 2024.

7. Zhang Y, Zhou Z, Liu Y, et al. Multi-modal medical data fusion and dimensionality reduction based on shared latent representation. International Journal of Telemedicine and Applications. 2020;2020:2901580. doi:10.1155/2020/2901580. Accessed July 29, 2024.

8. Gómez-Pérez D, López-Crespo O, Gil-Pita R, et al. PET/MRI co-registration methods for medical applications: A comprehensive review. Journal of Translational Medicine. 2019;17:298. doi:10.1186/s12967-019-2039-7. Accessed July 29, 2024.

9. Frid-Adar M, Diamant I, Klang E, et al. Generative adversarial networks for data augmentation in medical imaging: A review. IEEE Transactions on Medical Imaging. 2019;38:1265–1274. doi:10.1109/TMI.2018.2867350. Accessed July 29, 2024.

10. Chen M, Dligach D, Lasko TA. Explaining AI predictions in clinical settings: A scoping review of current methods and challenges. Journal of Medical Systems. 2021;45:96. doi:10.1007/s10916-021-01729-2. Accessed July 29, 2024.

11. Lambin P, Leijenaar RTH, Deist TM, et al. Radiomics: The bridge between medical imaging and personalized medicine. Nature Reviews Clinical Oncology. 2017;14:749–762. doi:10.1038/nrclinonc.2017.141. Accessed July 29, 2024.

12. Kumar R, Yadav R, Khera PS, et al. Radiomics: a quantitative imaging biomarker in precision oncology. Nuclear Medicine Communications. 2022;43:483–493. doi:10.1097/MNM.0000000000001525. Accessed July 29, 2024.

13. Zhou X, Li C, Wang S, Li Y, Tan T, Zheng H, Wang S. Generative Artificial Intelligence in Medical Imaging: Foundations, Progress, and Clinical Translation. arXiv preprint. 2025; arXiv:2508.09177v1. doi:10.48550/arXiv.2508.09177. Accessed December 11, 2025.